Our research

Data science is an area of extensive research, as new industry applications require real-time analysis of data streams that arrive at high speed, in high volume, and in a variety of formats. Terabytes, even petabytes, of data are generated each day in diverse formats such as structured data, plain text, images, audio and video.

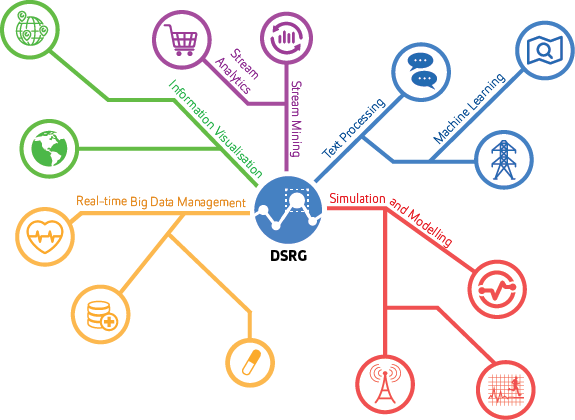

The centre is strongly involved in research in areas such as Real-time Big Data Management (RBDM), Stream Mining & Analytics (SMA), Text Processing & Machine Learning (TPML), Simulation & Modelling, and Information Visualisation (IV). The group's research is applied in Smart Business, eHealth, Computational Sport Science, Telecommunication, Social Networks, Power and Electricity, and City Planning. The primary goal of the group is to design and implement new algorithms that use a data-driven approach to knowledge extraction and the prediction of future behaviour.

Research areas and methods

Big Data is a topic of great contemporary interest given the proliferation of Big Data repositories now available online. These repositories are typically created from diverse, inter-linked online data and contain potentially valuable information that needs to be mined before it can be presented to an end-user in usable form.

The primary challenge to have emerged in Data Analytics in recent times is managing Big Data so that it can be analysed in real time. Currently available mining methods cannot scale to the petabyte- and exabyte-scale datasets that now exist. Research is required into methods that can mine such large repositories in real time and return knowledge in the form of actionable patterns that an end-user can use to support decision making. Some of the projects from the research group in this area are:

- Performance optimisation of semi-stream joins

- Processing of similarity joins in stream data

- Implementation of one-to-one, one-to-many, and many-to-many joins in stream data

- Optimisation of SQL queries

- Implementation and optimisation of semi-stream and full stream joins in clouds

- Parallelisation of semi-stream and full stream joins
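To make the idea of a semi-stream join concrete, the following minimal Python sketch joins an unbounded stream of tuples with a large master relation that is assumed to live on disk, keeping recently used master rows in a small in-memory cache. It is an illustration only and not one of the group's algorithms; the names `semi_stream_join`, `fetch_master_row` and `cache_size`, and the sample data, are assumptions made for this example.

```python
from collections import OrderedDict


def semi_stream_join(stream, fetch_master_row, key, cache_size=1000):
    """Join each stream tuple with its matching master row (equi-join on `key`).

    `fetch_master_row` stands in for a slow lookup against a disk-resident
    relation; an LRU cache avoids repeating that lookup for frequent keys.
    """
    cache = OrderedDict()
    for tup in stream:
        k = tup[key]
        if k in cache:
            cache.move_to_end(k)          # mark as most recently used
            row = cache[k]
        else:
            row = fetch_master_row(k)     # simulated disk access
            cache[k] = row
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict least recently used key
        if row is not None:               # inner join: drop unmatched tuples
            yield {**row, **tup}


# Hypothetical master data and stream, for illustration only.
master = {1: {"name": "Alice"}, 2: {"name": "Bob"}}
sales = [{"id": 1, "amount": 10}, {"id": 3, "amount": 7}, {"id": 2, "amount": 1}]

for joined in semi_stream_join(iter(sales), master.get, key="id", cache_size=2):
    print(joined)
```

Because the function is a generator, it processes one stream tuple at a time and never needs the whole stream in memory, which is the property that matters when the input has no finite length.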

Data streams are open-ended collections of data that do not have a finite length. Classical data mining methods developed for static, fixed-size datasets do not apply to data streams. Research in this area has flourished over the last 15 years or so, but some open research issues remain. One of these is the detection of concept drift. In most data streams the underlying stochastic data distribution changes periodically, and such changes need to be detected so that models can be kept up to date with the data in real time. The other major challenge comes from data streaming in from Big Data repositories. New scalable methods are needed that can mine data arriving at ultra-high rates while ensuring that model accuracy is not compromised by the higher throughput. Some of the sample projects from the research group are:

- Change detection of unsupervised data streams where class labels are either not relevant or not available

- Understanding the evolution of a stream in both time and space

- Capturing of recurrent patterns or motifs in stream data

- New frameworks for data stream mining to scale up to data arrival rates of terabytes per second
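As an illustration of change detection on a stream, the sketch below implements the Page-Hinkley test, a standard technique for flagging an upward shift in the mean of a sequence while reading it one value at a time. It is offered as a minimal example under stated assumptions, not as the group's own method; the class name, the parameter values `delta` and `threshold`, and the synthetic stream are all chosen for illustration.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a stream."""

    def __init__(self, delta=0.05, threshold=5.0):
        self.delta = delta          # tolerated magnitude of change
        self.threshold = threshold  # alarm level (often written lambda)
        self.n = 0
        self.mean = 0.0             # running mean of all values seen
        self.cum = 0.0              # cumulative deviation from the mean
        self.min_cum = 0.0          # smallest cumulative deviation so far

    def update(self, x):
        """Consume one value; return True if drift is signalled."""
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum += x - self.mean - self.delta
        self.min_cum = min(self.min_cum, self.cum)
        return (self.cum - self.min_cum) > self.threshold


def first_drift(stream, detector=None):
    """Return the index of the first drift alarm, or None if there is none."""
    detector = detector or PageHinkley()
    for i, x in enumerate(stream):
        if detector.update(x):
            return i
    return None


# Synthetic stream (an assumption): the mean jumps from 0 to 1 at index 50.
stream = [0.0] * 50 + [1.0] * 50
print("drift signalled at index:", first_drift(stream))
```

The detector keeps only four numbers of state regardless of how long the stream runs, which is why tests of this kind suit open-ended data. In practice `delta` and `threshold` trade detection delay against false alarms and would need tuning for each stream.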

Data has always been a critical component of any business model. It has been used mostly to detect causal trends between two overt variables for the purpose of optimising an objective. In recent times, the extent of digitalisation has made it easy to collect an abundance of data, resulting in gigabytes of data on variables that do not necessarily have overt relations. This abundance has given rise to the discipline of data science, which deals with extracting latent information embedded in seemingly unrelated or loosely related variables. Text processing goes a step further and deals with techniques for extracting information embedded in text expressed in a natural language. This is challenging because natural language is unstructured; however, it can be applied successfully to a huge range of applications. Some of the sample projects from the research group are:

- Social media text mining

  - Location mining from Tweet messages

  - Bullying detection on social media platforms

  - Chronological topic tracking in Tweet messages

- Text classification

  - Sentiment detection in movie reviews

  - Sentiment detection in hospitality related service reviews

- Recommender analytics

  - Recommend movie/wine based on keywords

- Conversational agents

  - Use of “chatbots” to interact with users for information dissemination

  - Natural text generation for conversational agents

- Document summarisation

  - Collate/present information from annual reports

- Semantic web

  - Designing linked data from NZ Government’s released open data initiative
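To give a flavour of text classification tasks such as sentiment detection in reviews, here is a minimal multinomial Naive Bayes classifier with add-one (Laplace) smoothing, written with the Python standard library only. It is a sketch under simplifying assumptions (whitespace tokenisation, a four-sentence toy training set invented for this example) and does not represent the models used in the group's projects.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    # Simplifying assumption: lower-case and split on whitespace.
    return text.lower().split()


def train(examples):
    """Count documents per class and word occurrences per class."""
    class_docs = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        class_docs[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return class_docs, word_counts, vocab


def predict(model, text):
    """Return the class with the highest log-posterior for `text`."""
    class_docs, word_counts, vocab = model
    total_docs = sum(class_docs.values())
    best_label, best_score = None, -math.inf
    for label in class_docs:
        score = math.log(class_docs[label] / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing avoids zero probabilities.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy training data (invented for illustration).
reviews = [
    ("great film loved it", "pos"),
    ("wonderful acting great story", "pos"),
    ("boring plot terrible acting", "neg"),
    ("awful film hated it", "neg"),
]
model = train(reviews)
print(predict(model, "great story"))
print(predict(model, "terrible boring film"))
```

Working in log space keeps the product of many small word probabilities from underflowing, which matters once documents are longer than a few words.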

Modelling is the very core of data analytics. Modelling enables inferences to be made from data and supports decision making. It is a very diverse area encompassing a number of different disciplines. In Computer Science, modelling makes use of principles from Mathematics, Statistics and Artificial Intelligence. Very often in Data Analytics a model is constructed from the data from the ground up, meaning that no assumptions about the statistical distribution are made in advance. This enables the resulting models to generalise better to new incoming data than traditional methods that assume an a priori parametric statistical distribution.

Simulation is a concept that is closely connected to Modelling. A system under study is assumed to have basic behavioural properties, and it is studied by implementing a computer program that mimics the expected behaviour using the data that is available. Simulation can be used to explore system behaviour with various types of data. For example, we can simulate the behaviour of a robot navigating unfamiliar terrain for which no topological map exists.

Both Modelling and Simulation are well-established areas in Data Analytics, but new research is necessary to construct models and simulations for the new types of systems that are constantly being created in today’s world.

Simulation and modelling (SM) approaches are widely used in problem solving. The value of these approaches lies in their ability to transform a conceptual, mathematical or analytical model into a computational simulation model that can test hypotheses, collect data, analyse drivers, and generate emergent patterns. Computational simulations are beneficial because they enable the creation of an artificial representation of a system. SM approaches are flexible and can work with artificial or real data sets to produce results that exhibit emergent phenomena.

At DSRG we employ hybrid simulation approaches that combine a range of analytical, mathematical, computational, and simulation techniques to investigate research problems.

Our focus areas include:

- Agent based modelling

- Artificial intelligence in healthcare

- Creation of dynamic networks for testing influence

- Network implementation and technology uptake
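As a small illustration of agent-based modelling of technology uptake on a network, the sketch below uses a simple threshold rule: an agent adopts once a given fraction of its neighbours has adopted. The ring network, the threshold values and the function names are assumptions chosen to keep the example short; they are not taken from the group's models.

```python
def ring_neighbours(n):
    """Each of n agents is linked to its left and right neighbour on a ring."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def simulate_uptake(neighbours, seeds, threshold, max_steps=100):
    """Run synchronous adoption steps until no agent changes state.

    Returns the number of adopters after each step, starting with the seeds.
    """
    adopted = set(seeds)
    history = [len(adopted)]
    for _ in range(max_steps):
        # Agents decide based on the state at the start of the step.
        new_adopters = {
            agent
            for agent, nbrs in neighbours.items()
            if agent not in adopted
            and sum(nb in adopted for nb in nbrs) / len(nbrs) >= threshold
        }
        if not new_adopters:
            break  # the system has reached a stable state
        adopted |= new_adopters
        history.append(len(adopted))
    return history


network = ring_neighbours(10)
# One neighbour is enough (threshold 0.5): adoption spreads around the ring.
print(simulate_uptake(network, seeds={0}, threshold=0.5))
# Both neighbours are required (threshold 1.0): a single seed cannot spread.
print(simulate_uptake(network, seeds={0}, threshold=1.0))
```

The two runs differ only in the threshold, yet one ends in full uptake and the other stalls at the seed. This is the kind of emergent, system-level pattern that simulation exposes from simple individual rules.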

Information visualisation is at the heart of any data analytics exercise. Visualisation is a very powerful and effective way of disseminating knowledge. From simple charts to more sophisticated forms such as Principal Component plots and Multidimensional Scaling plots, the core intention is to present information in a simple visual manner that assists decision makers in making the right decision. Methods range from mathematical and statistical techniques to newly developed types of charts and diagrams used in data analytics for modelling network behaviour in computer and social media networks.

Again, this is a well-established area, but new methods are required for systems that are specific to problems arising in computer science.